By Emmanuel Forgues

Hyperconverged technologies (Nutanix, Simplivity, VCE) and companies facing the DevOps paradigm

To survive today, a company faces a challenge: balancing development time against market demand. It cannot wait 6 months to come up with an application. The release of a new OS (Android, iOS, Windows, …) requires immediate responsiveness, or the company may face a potentially heavy financial penalty or simply miss a new market. However, we must not confuse speed with haste, even when marketing cannot wait and the economic context is squeezing budgets. Just look at the sad example of the mobile application deployed urgently at the request of the French authorities to warn of terrorist attacks: this application (SAIP), developed in 2 months by a team of 15 engineers, did not work until a few hours after the attack in Nice.

The new convergence and hyper-convergence solutions give companies a rationalized infrastructure with which to face the challenges of DevOps. The major vendors take different approaches, but they should eventually be able to integrate a broader range of technologies into a single offering.

The unnamed problems encountered in business:

Two technical teams contribute to the development of every company that relies on IT.

On one hand, the development team (DEV) produces IT solutions for internal or external use; on the other, the operations team (OPS) provides DEV with the necessary tools and maintains them. Their goals are often contradictory within the same company; in fact, aligning them is a strategic and economic challenge for IT departments.

For convenience, we will refer to the Development teams as DEV and the Operations teams as OPS.

Where is the bottleneck between market demands and technical services? Why is DEV not reactive enough? A first answer: because it is hamstrung by overly rigid infrastructure, an inadequate service catalog, and physical or virtual infrastructure with no « programmability ». Why is OPS not reactive enough? Most likely because it is not sufficiently involved with the DEV teams to meet their expectations.

Clearly, physical and virtual infrastructure has to change to become more programmable. At the same time, DEV must be able to program the infrastructure, and OPS must be able to understand and control DEV's interactions with it. In short, the difficulties between DEV and OPS are as follows:

We will call « DevOps » the necessary coming-together of these two teams. DevOps is a contraction of the English words Development and Operations.

The vast majority of analyses point to 2016-2017 as the beginning of massive adoption of PaaS (Platform as a Service), DevOps and Docker containers.

- According to IDC: 72% of companies will use PaaS

- DZone: 45% of respondents are evaluating or already using Docker

- RightScale: 71% of companies report a multi-cloud IT strategy.

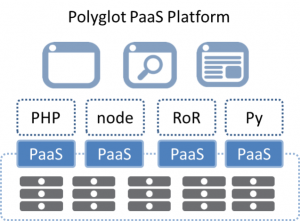

PaaS:

A « Platform as a Service » (PaaS) is a « cloud computing » service that provides developers with a platform and the environment they need, along with the benefits of the « Cloud ».

This type of service offers:

- a rapidly scalable environment without heavy investment or maintenance costs;

- a user-friendly web interface that can be used without deep infrastructure knowledge;

- flexibility of use across different circumstances;

- a highly collaborative environment;

- access and data security handled by the service provider.

Docker containers:

Today, deploying existing applications is still a relevant question. But soon we will also have to address the deployment of future applications (containers / Docker). Docker technology allows services to run on any server, regardless of the underlying cloud infrastructure.

Unlike a VM (a Virtual Machine weighing several GB), a container (a few MB) does not carry a full OS: it shares the host's kernel. A Docker container is therefore lighter and faster to start, move and duplicate (as with any other routine maintenance action).

Consider a company that must sharply increase its resources for urgent strategic development (banks, software development companies, …). With a traditional VM-based solution, you must provision a large number of VMs in advance, keep them on standby, and start them to make them available. You also need the infrastructure to support starting all these environments at the same time: CPU, RAM, storage, disk I/O, network I/O…

By doing away with a full OS per instance, containers consume 4 to 30 times less RAM and disk, and applications start in a few seconds. Containers / Docker are to applications what virtualization was to servers in the 90s.
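To make the « 4 to 30 times less RAM » figure concrete, here is a small worked calculation. The host size and per-VM footprint below are hypothetical values chosen only to keep the arithmetic round; they are not measurements from any vendor. The point it illustrates: if each instance needs N times less RAM, roughly N times more instances fit on the same host, before accounting for host overhead or overcommit.

```python
# Hypothetical sizing figures, for illustration only (not measured data).
HOST_RAM_MB = 192_000      # RAM available on one host (assumption)
VM_FOOTPRINT_MB = 3_000    # RAM per VM, guest OS included (assumption)


def instances_per_host(host_ram_mb: int, footprint_mb: int) -> int:
    """How many instances fit in RAM, ignoring host overhead and overcommit."""
    return host_ram_mb // footprint_mb


vms = instances_per_host(HOST_RAM_MB, VM_FOOTPRINT_MB)
for ratio in (4, 30):  # the "4 to 30 times less RAM" range cited in the text
    container_mb = VM_FOOTPRINT_MB // ratio
    containers = instances_per_host(HOST_RAM_MB, container_mb)
    print(f"ratio {ratio:>2}x: {vms} VMs vs {containers} containers per host")
```

With these assumed numbers, the same host goes from 64 VMs to between 256 and 1920 containers, which is why a burst of demand is far cheaper to absorb with containers than with pre-provisioned VMs.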

Today, containers make development easier by automating integration. Continuous integration automates the promotion of source code updates from DEV through QA and pre-prod to production.
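The promotion flow described above can be sketched as a loop over the four environments named in the text. This is a minimal model, assuming the artifact is a container image (the tag below is hypothetical) and that each stage has a pass/fail check; a real CI server such as Jenkins or GitLab CI would express the same idea in its own configuration format rather than in Python.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Environment names taken from the text; the check functions are hypothetical.
STAGES = ["DEV", "QA", "pre-prod", "production"]


@dataclass
class Artifact:
    image: str                                    # e.g. a container image tag
    history: List[str] = field(default_factory=list)


def promote(artifact: Artifact,
            checks: Dict[str, Callable[[Artifact], bool]]) -> str:
    """Push the same artifact through each stage, stopping at the first failed check."""
    for stage in STAGES:
        check = checks.get(stage, lambda a: True)  # stages without a check pass
        if not check(artifact):
            return f"stopped at {stage}"
        artifact.history.append(stage)
    return "deployed to production"


ok = Artifact("shop-api:1.4.2")
print(promote(ok, {"QA": lambda a: True}), ok.history)

bad = Artifact("shop-api:1.4.3")
print(promote(bad, {"QA": lambda a: False}), bad.history)
```

The design choice worth noting is that the same artifact moves from stage to stage instead of being rebuilt for each environment; this is what containers make easy, since the image carries its dependencies with it.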

One problem remains: putting these containers into production, which IT staff cannot do as long as network control, security, storage, backup, monitoring… are not properly integrated into container programming and configuration. Containers will therefore be the software answer to DevOps: used by DEV to consume infrastructure, while remaining under the control of OPS.

For good integration, vendors already offer orchestration solutions that handle load balancing, resilience, encryption, etc.
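Two of the orchestration features mentioned above, load balancing and resilience, can be illustrated with a toy model. This is not the API of Kubernetes, Docker Swarm or any real orchestrator; the `Service` class, its method names and the replica names are hypothetical. It simply routes requests round-robin across healthy replicas and "restarts" failed ones during a reconciliation pass.

```python
from itertools import count
from typing import List


class Service:
    """Toy orchestrator view of one service (hypothetical, for illustration)."""

    def __init__(self, name: str, replicas: int):
        self.name = name
        # Replica name -> health status; dicts keep insertion order.
        self.healthy = {f"{name}-{i}": True for i in range(replicas)}
        self._counter = count()

    def mark_failed(self, replica: str) -> None:
        self.healthy[replica] = False

    def route(self) -> str:
        """Load balancing: pick the next healthy replica in round-robin order."""
        live = [r for r, ok in self.healthy.items() if ok]
        if not live:
            raise RuntimeError(f"no healthy replica for {self.name}")
        return live[next(self._counter) % len(live)]

    def reconcile(self) -> List[str]:
        """Resilience: 'restart' every failed replica and return their names."""
        restarted = [r for r, ok in self.healthy.items() if not ok]
        for r in restarted:
            self.healthy[r] = True
        return restarted


svc = Service("web", 3)
print([svc.route() for _ in range(4)])  # cycles web-0, web-1, web-2, web-0
svc.mark_failed("web-1")
print([svc.route() for _ in range(2)])  # web-1 is skipped while it is down
print(svc.reconcile())                  # web-1 is brought back
```

A real orchestrator does the same in spirit (it compares desired state to actual state and corrects the difference) but also handles networking, placement, secrets and encryption, which is exactly the integration work the text says OPS must keep under control.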

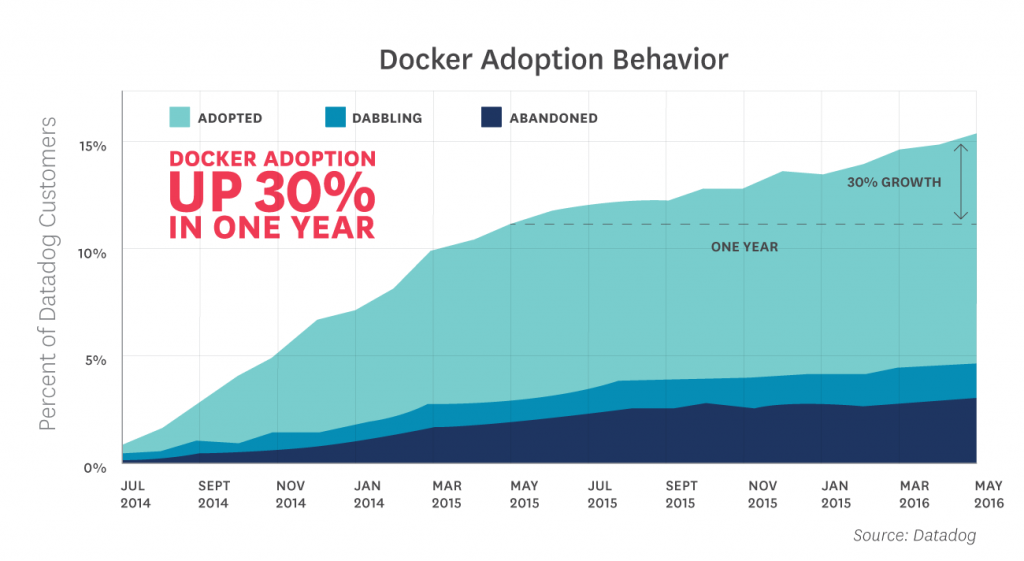

According to Datadog, Docker adoption grew fivefold in 2015.

Docker containers are quickly proving their full value as a DevOps solution!

The problem has a name: DevOps

According to Gartner, DevOps moved beyond the niche stage in 2016, with 25% of very large global companies having adopted it, in a market worth $2.3 billion. According to IDC, 53% of companies in France have committed to a widespread DevOps approach across all their developments.

Given how hard it is for companies to turn their traditional infrastructures into sufficiently agile solutions, cloud providers can offer DevOps implementations backed by their scale-out approaches and economies of scale.

Implementing DevOps requires looking at several issues: structural, organizational, resources, etc. For the CIO, who is responsible for the technical answer, implementing an agile infrastructure with current traditional equipment is a nightmare. He has to manage both complex environments with multiple servers (storage, computing, networking, security) and development services (multiple databases, web portals, development stacks such as Python and C++, libraries and other APIs, application monitoring and performance analysis…). Container technology and overlay networks provide relevant answers to networking problems, provided the administrator of these services anticipates their arrival. Everything has to be done in record time under difficult conditions: external market pressure, internal pressure from marketing, and limited budgets. Once everything is in place, all applications must be able to interact properly with each other while still handling rapid migration needs. And, of course, all of this without impacting the rest of the infrastructure.

- The number of physical or virtual servers

- The many development services

- Ensuring stability and interactions despite complexity that grows with the number of servers and services

- Ever-shorter implementation times

- Strained budgets

- Ensuring the ability to migrate from one environment to another while preserving the three previous points

- Growing market pressure (from marketing within the company)

- Ensuring that investments can evolve over several years

Apart from traditional solutions, two major trends on the market meet these needs:

- Build / assemble for a specific « workload »: the leading example being the Oracle Exadata Database Machine (hyper-appliances).

- Integrate all storage, computing power, networking… into one solution that can run multiple workloads (convergence and hyper-convergence solutions such as Nutanix (http://www.nutanix.com), Simplivity (https://www.simplivity.com), VCE (http://www.vce.com), …). Today these technologies can run existing workloads, but they should also anticipate the next ones.

(Hyper-)converged infrastructures and integrated systems have the greatest potential to fit into a DevOps solution. They offer all the benefits of being expandable, standardized and programmable…

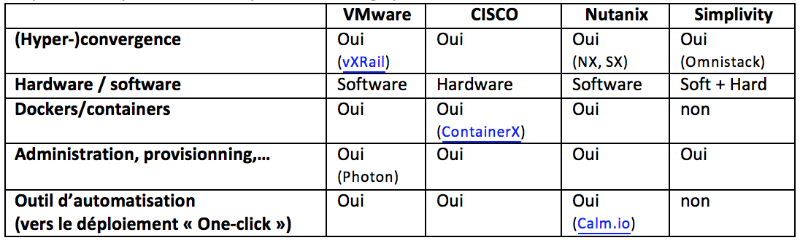

Some market players include in their solutions all or part of the building blocks that matter for DevOps:

- Flexible provisioning of resources (storage, CPU, RAM, …) with (hyper-)convergence solutions.

- Provisioning of the necessary applications with Docker or container solutions, and applications for rapid provisioning.

- Rapid provisioning of work environments via a web portal: 1-Click.

- Less need for complex and costly skills in infrastructure teams.

- Total infrastructure outsourcing, so as to no longer bear its costs.

Today VMware, Cisco and Nutanix can provision VMs as well as containers; Simplivity can so far do this quickly for VMs only (but I do not expect this company to be outdone for long). VMware can run a single OS supporting multiple containers, which already simplifies managing that OS. Nutanix can provision its storage containers. Nutanix acquired Calm.io but will have to evolve the product so that it can deploy existing applications, as it already can for future applications. All these companies show a remarkable ability to avoid creating bottlenecks, so that they can face the future serenely.

Today these players can meet companies' needs to simplify their infrastructure by removing equipment such as SANs or eliminating Fibre Channel, simplifying backups and running a DRP (Disaster Recovery Plan) under more acceptable conditions. In a second phase, it will be important to take better advantage of this infrastructure to deploy, move… environments. Finally, these same players will then be able to fully meet the needs of DevOps.

Today these players are fighting over the infrastructure market, but the heart of the market has already shifted toward providing applications together with the infrastructure they need (SDDC, Software-Defined Data Center). Some of them are already positioned on infrastructure while moving up into the software layers. An accountant's eye on their margins would note that they resemble those of software companies (85%). All these players can already offer simplification, but some are already looking toward optimizing these infrastructures (VCE and Nutanix, for example).

The winner will certainly be the one who manages to provide a single integrated solution capable of deploying both today's applications (Exchange, etc.) and tomorrow's (containers / Docker). Who will be the first to offer an « SDDC – all in a box » solution able to meet all these needs, and… when?


Emmanuel Forgues holds a degree in systems, network and security engineering from EPITA, Paris, France (June 1997), and an Executive Master from Sciences Po Paris (December 2012), with a thesis on international strategy in the Cloud market.

Emmanuel Forgues is Licensed in system network & security engineering in Paris, France – EPITA (June 1997). Licensed in Sciences Politique Paris in Executive Master, topic is international strategie on Cloud market. (December 2012).